RELIC: Interactive Video World Model with Long-Horizon Memory

Interactive world exploration given arbitrary starting images.

Abstract

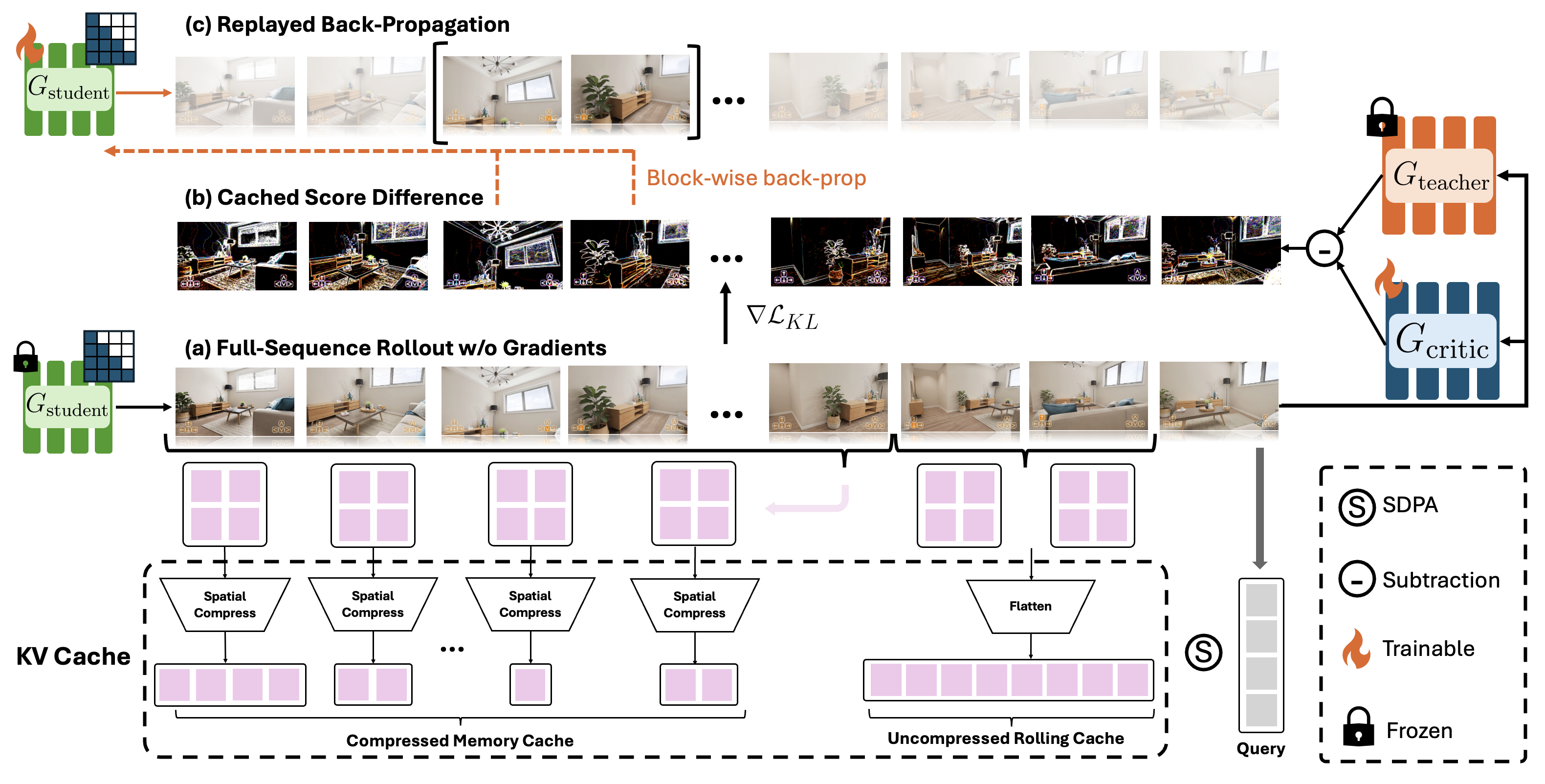

A truly interactive world model requires three key ingredients: real-time long-horizon streaming, consistent spatial memory, and precise user control. However, most existing approaches address only one of these aspects in isolation, because achieving all three simultaneously is highly challenging; for example, long-term memory mechanisms often degrade real-time performance. In this work, we present RELIC, a unified framework that tackles these three challenges together. Given a single image and a text description, RELIC enables memory-aware, long-duration exploration of arbitrary scenes in real time. Built upon recent autoregressive video-diffusion distillation techniques, our model represents long-horizon memory using highly compressed historical latent tokens encoded with both relative actions and absolute camera poses within the KV cache. This compact, camera-aware memory structure supports implicit 3D-consistent content retrieval and enforces long-term coherence with minimal computational overhead. In parallel, we fine-tune a bidirectional teacher video model to generate sequences beyond its original 5-second training horizon, and transform it into a causal student generator using a new memory-efficient self-forcing paradigm that enables full-context distillation over long-duration teacher sequences as well as long student self-rollouts. Implemented as a 14B-parameter model and trained on a curated Unreal Engine–rendered dataset, RELIC achieves real-time generation at 16 FPS while demonstrating more accurate action following, more stable long-horizon streaming, and more robust spatial-memory retrieval compared with prior work. These capabilities establish RELIC as a strong foundation for the next generation of interactive world modeling.
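To make the idea of a compressed, camera-aware memory more concrete, the following is a minimal bookkeeping sketch, not RELIC's implementation. It assumes a hypothetical scheme in which the most recent frames keep all of their latent tokens while older frames are average-pooled by a fixed ratio, and every entry keeps its relative action and absolute camera pose. The names `MemoryEntry`, `CompressedMemory`, `window`, and `ratio`, the pose layout, and the pooling rule are all illustrative assumptions; in the actual model, retrieval happens implicitly through attention over the KV cache, which this toy does not model.

```python
from dataclasses import dataclass
from typing import List, Tuple

Pose = Tuple[float, float, float]  # hypothetical layout: (x, y, yaw)


@dataclass
class MemoryEntry:
    tokens: List[float]  # stand-in for latent tokens (one float per token)
    action: str          # relative action that produced this frame
    pose: Pose           # absolute camera pose of this frame
    compressed: bool = False


def pool(tokens: List[float], ratio: int) -> List[float]:
    """Average consecutive groups of `ratio` tokens (assumed compression rule)."""
    groups = [tokens[i:i + ratio] for i in range(0, len(tokens), ratio)]
    return [sum(g) / len(g) for g in groups]


class CompressedMemory:
    def __init__(self, window: int = 2, ratio: int = 4) -> None:
        assert window >= 1 and ratio >= 1
        self.window = window  # number of recent frames kept uncompressed
        self.ratio = ratio    # token reduction factor for older frames
        self.entries: List[MemoryEntry] = []

    def append(self, tokens: List[float], action: str, pose: Pose) -> None:
        self.entries.append(MemoryEntry(list(tokens), action, pose))
        # Compress every entry that has just fallen out of the recent window.
        for entry in self.entries[:-self.window]:
            if not entry.compressed:
                entry.tokens = pool(entry.tokens, self.ratio)
                entry.compressed = True

    def num_tokens(self) -> int:
        return sum(len(e.tokens) for e in self.entries)


mem = CompressedMemory(window=2, ratio=4)
for t in range(5):
    mem.append([float(t)] * 8, "forward", (float(t), 0.0, 0.0))
print(mem.num_tokens())  # 22 tokens, versus 40 if nothing were compressed
```

Under these assumptions the cache grows by only `8 / ratio` tokens per old frame, while the pose stored with each entry remains available for conditioning when the camera returns to a previously seen location.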

Overview

Overview of our long-video distillation pipeline with compressed memory. A 20-second bidirectional teacher is distilled into a fast autoregressive student model using self-forcing, and memory-efficient training is enabled through replayed back-propagation. Please see our tech report for more details.
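The overview names replayed back-propagation without describing it here, so the following toy is only one plausible reading and should not be taken as the method from the tech report. It assumes three phases: a long rollout with no computation graph kept, a full-sequence loss whose gradients with respect to each output are cached as plain numbers, and a block-by-block replay that recomputes each output and accumulates the parameter gradient. The scalar "generator" `y = w * c`, the squared-error loss, and all function names are hypothetical stand-ins.

```python
from typing import List


def rollout(w: float, x0: float, steps: int) -> List[float]:
    """Phase 1: autoregressive rollout; only the per-step contexts are stored."""
    contexts, x = [], x0
    for _ in range(steps):
        contexts.append(x)
        x = w * x  # toy generator: next output from the previous one
    return contexts


def output_grads(w: float, contexts: List[float], targets: List[float]) -> List[float]:
    """Phase 2: cache dL/dy_t for L = sum_t 0.5 * (y_t - target_t)^2."""
    return [w * c - t for c, t in zip(contexts, targets)]


def replayed_backprop(contexts: List[float], grads: List[float], block: int) -> float:
    """Phase 3: replay one block at a time and accumulate dL/dw."""
    total = 0.0
    for start in range(0, len(contexts), block):
        block_ctx = contexts[start:start + block]
        block_grad = grads[start:start + block]
        for c, g in zip(block_ctx, block_grad):
            # y = w * c with the context c held constant, so dy/dw = c.
            total += g * c
    return total


w = 0.5
contexts = rollout(w, x0=2.0, steps=4)
grads = output_grads(w, contexts, targets=[0.0] * 4)
print(replayed_backprop(contexts, grads, block=2))  # 2.65625
```

In this reading, only one block's activations need to exist at any time during the replay, so peak memory depends on the block size rather than on the rollout length, and the accumulated gradient does not depend on how the sequence is split into blocks.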

Walking into Paintings

Diverse Art Styles

Long-term Memory

Multi-Key Control

Comparison with Others

We compare RELIC with recent video world models by applying an identical control sequence to all models (shown in the first column) to evaluate their action-following capability, scene generalization, and long-term memory.

Citation

If you find our work inspiring or incorporate it into your research, please consider citing the following:

@online{RelicWorldModel2025,
  author = {Yicong Hong and Yiqun Mei and Chongjian Ge and Yiran Xu and Yang Zhou and Sai Bi and
            Yannick Hold-Geoffroy and Mike Roberts and Matthew Fisher and Eli Shechtman and
            Kalyan Sunkavalli and Feng Liu and Zhengqi Li and Hao Tan},
  title  = {RELIC: Interactive Video World Models with Long-Horizon Memory},
  url    = {https://relic-worldmodel.github.io/},
  year   = {2025}
}